ELECTRONICS, PHOTONICS, INSTRUMENTATION AND COMMUNICATIONS

Relevance. Modern image processing techniques focus on enhancing visual quality, particularly through adaptive local contrast enhancement. Classical algorithms can achieve strong contrast enhancement; however, they do not account for the global scene context and often amplify noise. This paper proposes a hybrid method for adaptive local image contrast enhancement with neural network-based parameter adjustment.

The aim of this research is to develop an algorithm that provides optimal contrast enhancement while minimizing noise artifacts and distortions, thereby improving the accuracy of real-time object detection.

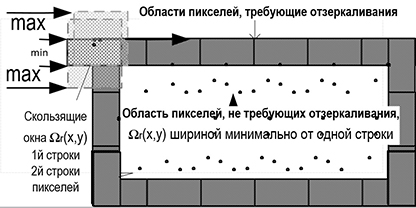

The essence of the proposed solution lies in employing a convolutional neural network to configure local contrast parameters automatically, based on statistical brightness characteristics and textural image features. The proposed method segments the image into local regions, analyzes their properties, and adaptively adjusts the processing parameters. This improves the discernibility of low-contrast objects under various imaging conditions. The algorithm dynamically selects the local region dimensions and contrast parameters depending on the background and the target objects in the scene. The integrated neural network module enables precise adjustment of processing parameters while minimizing undesirable artifacts such as halos and blockiness. The methodology has been implemented in software and hardware for an optoelectronic system designed for computer vision applications, aerial image processing, video surveillance, and locating victims in various disaster scenarios.
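The per-region parameter selection can be sketched as follows. Here a hypothetical `predict_gain` function stands in for the convolutional network. It maps each tile's mean and standard deviation to a contrast gain, and each pixel is stretched around its tile mean. The fixed tile size, the target standard deviation, and the gain cap are assumptions for illustration. The paper's network, its textural features, and its adaptive choice of region size are not reproduced.

```python
def tile_stats(img, r0, c0, size):
    """Mean and standard deviation of one square tile (clipped at the image border)."""
    vals = [img[r][c]
            for r in range(r0, min(r0 + size, len(img)))
            for c in range(c0, min(c0 + size, len(img[0])))]
    mean = sum(vals) / len(vals)
    var = sum((v - mean) ** 2 for v in vals) / len(vals)
    return mean, var ** 0.5


def predict_gain(mean, std, target_std=0.2, max_gain=4.0):
    """Hypothetical stand-in for the CNN: maps local statistics to a contrast gain.

    Low-contrast tiles get a larger gain; max_gain caps it so that flat, noisy
    regions are not amplified without bound. `mean` is unused here but would be
    one of the network inputs.
    """
    if std == 0.0:
        return 1.0
    return max(1.0, min(max_gain, target_std / std))


def enhance(img, size=8):
    """Stretch each tile around its local mean with a tile-specific gain (values in [0, 1])."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for r0 in range(0, h, size):
        for c0 in range(0, w, size):
            mean, std = tile_stats(img, r0, c0, size)
            gain = predict_gain(mean, std)
            for r in range(r0, min(r0 + size, h)):
                for c in range(c0, min(c0 + size, w)):
                    out[r][c] = min(1.0, max(0.0, mean + gain * (img[r][c] - mean)))
    return out
```

Processing tiles independently, as here, produces exactly the blockiness the paper aims to avoid. Practical schemes such as CLAHE interpolate parameters between neighboring tiles. In this sketch the gain cap plays the role of limiting noise amplification in flat regions.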

The scientific novelty of this work lies in the development of an algorithm that automatically regulates contrast parameters based on analysis of both global and local scene context using artificial intelligence.

The theoretical significance of the work consists in the development of a contrast enhancement algorithm and an image quality assessment method that account for how contrast is perceived by both humans and AI systems under challenging observation conditions such as fog, smoke, and low illumination.

The practical significance of the developed algorithm lies in enhancing the contrast of objects in images acquired in both the visible and infrared spectral ranges, and in increasing the reliability of their recognition by artificial intelligence.

Relevance. Russia, like the rest of the world, is actively transitioning from analog to digital technologies, and this process fully applies to radio broadcasting. In Russia, experimental digital radio broadcasting (DRB) in the VHF band is authorized in three formats: DRM, DAB, and RAVIS. However, the Government has not yet chosen one of these formats as the national standard. This is explained primarily by the incompleteness of the information required for producing and operating equipment, above all for the DRM system, which is the only DRB system recommended by ITU-R for use in all frequency bands allocated to terrestrial radio broadcasting. This paper fills this gap with respect to the development of receiving equipment.

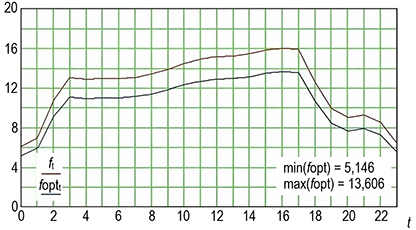

Purpose of this work: to estimate the required accuracy of the start moment of the forward discrete Fourier transform (DFT) relative to the beginning of the useful part of the OFDM symbols, such that the signal error of the DRM system operating in robustness mode E does not exceed the values required by the ITU-R standard and Recommendation BS.1660-8 (06/2019).

Methods. The research is based on a simulation model of the DRM transceiver path for robustness mode E. The model is supplemented with modules that, for different modulation types and protection levels, change the time shift in the receiver between the start of the forward discrete Fourier transform and the beginning of the useful part of the OFDM symbol, and that evaluate the quality of the signal received by the DRM receiver at each value of the shift.
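The effect of a misaligned DFT window can be illustrated with a toy OFDM simulation. It measures MER = 10·log10(Σ|s|² / Σ|e|²) for one symbol as the window start is shifted. The parameters (64 subcarriers, an 8-sample cyclic prefix, QPSK, a noiseless channel, and ideal removal of the common phase ramp) are assumptions chosen for illustration and are not the DRM robustness mode E parameters. The offset is measured in samples, with positive values meaning a late window start, and is meant to lie between −cp_len and cp_len.

```python
import cmath
import math
import random


def dft(x):
    """Forward DFT (O(n^2), enough for a toy size)."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]


def idft(spectrum):
    n = len(spectrum)
    return [sum(spectrum[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)) / n
            for t in range(n)]


def ofdm_symbol(data, cp_len):
    """Useful part preceded by a cyclic prefix (guard interval)."""
    body = idft(data)
    return body[-cp_len:] + body


def mer_db(offset, n=64, cp_len=8, seed=1):
    """MER of symbol 1 when the DFT window starts `offset` samples after the ideal point.

    Negative offsets move the window into the cyclic prefix, positive offsets
    into the next symbol. Noiseless channel; an ideal equalizer removes the
    linear phase ramp that any offset causes.
    """
    rng = random.Random(seed)
    qpsk = [complex(i, q) / math.sqrt(2) for i in (-1, 1) for q in (-1, 1)]
    sym1 = [rng.choice(qpsk) for _ in range(n)]
    sym2 = [rng.choice(qpsk) for _ in range(n)]
    stream = ofdm_symbol(sym1, cp_len) + ofdm_symbol(sym2, cp_len)
    start = cp_len + offset                      # ideal start: end of symbol 1's prefix
    rx = dft(stream[start:start + n])
    eq = [rx[k] * cmath.exp(-2j * math.pi * k * offset / n) for k in range(n)]
    signal = sum(abs(s) ** 2 for s in sym1)
    error = sum(abs(e - s) ** 2 for e, s in zip(eq, sym1))
    return math.inf if error == 0 else 10 * math.log10(signal / error)
```

In this idealized model, an early start inside the guard interval only introduces a correctable phase ramp, while a late start immediately lets the next symbol leak into the window and lowers MER. Converting samples to microseconds needs a sampling rate that this sketch does not fix, so its numbers are not comparable with the paper's results.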

Results. The modulation error ratio (MER) and bit error rate (BER) were chosen as the criteria for evaluating the quality of the received OFDM signal. It is shown that, to maintain comfortable reception, the maximum allowable time mismatch between the start of the forward discrete Fourier transform and the beginning of the useful part of the OFDM symbol should not exceed 1.8–2.3 μs for QAM-4 subcarrier modulation and 0.8–1.3 μs for QAM-16. The range of values is determined by the protection level PL. The required time synchronization accuracy of a DRM receiver operating in robustness mode E depends mainly on the QAM order; the protection level PL has a significantly smaller influence, and for BER ≤ 10⁻⁴ it can be neglected. The obtained results are new for the DRM system operating in robustness mode E (VHF band).

Theoretical / Practical significance. A method for estimating the acceptable time mismatch of the OFDM signal in DRM reception is proposed. The results are needed for developing the time synchronization unit of DRM digital radio broadcasting receivers.

Relevance. With the development of information technologies and the Internet of Things, the demand for more efficient and flexible mobile networks is increasing. Future wireless systems must not only ensure high speed and reliability of connections but also enable quick recovery of communication in emergency situations. Ground base stations (GBS) are typically installed permanently and designed for long-term service, which limits their efficiency during sudden traffic surges or infrastructure damage. In such conditions, aerial base stations (ABS) emerge as a promising solution. Thanks to their mobility, affordability, and ability to be deployed quickly, they can support ground stations under high user density or in emergencies when GBS are damaged or destroyed. This makes them an essential element of future communication networks.

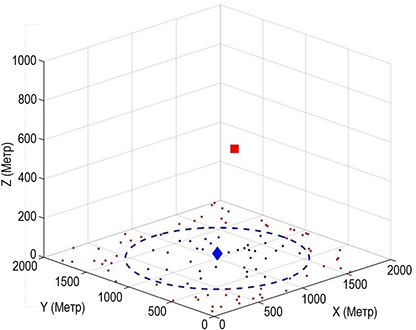

Problem Statement. Development of methods for placing ABS in three-dimensional space and for distributing users and power among users so as to maximize the data transmission speed of the system.

Goal of the work. Increase the data transmission speed of systems using ABS to support GBS through optimal three-dimensional positioning of ABS, distribution of users between ABS and GBS, and power allocation among users.

Methods. The research combined a dynamic approach, in which the GBS coverage radius is gradually reduced, with a reinforcement learning algorithm. Analysis of the results showed that the proposed method is highly effective and significantly increases the data transmission speed within the framework of the problem.
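Power allocation is one of the three jointly optimized components. The summary does not state which allocation rule is used. As a reference point, the sketch below shows classical water-filling, which maximizes the sum rate Σ log2(1 + p_i·g_i) of parallel channels under a total power budget. The per-user gains g_i (linear channel-gain-to-noise ratios, assumed positive) and the bisection depth are illustrative assumptions. ABS placement, user association, and the reinforcement learning agent are not modeled.

```python
import math


def water_filling(gains, total_power, iterations=100):
    """Split total_power over channels to maximize sum(log2(1 + p_i * g_i)).

    The optimum is p_i = max(0, mu - 1/g_i); the water level mu is found by
    bisection so that the powers add up to total_power. All gains must be > 0.
    """
    lo, hi = 0.0, total_power + max(1.0 / g for g in gains)
    for _ in range(iterations):
        mu = (lo + hi) / 2
        used = sum(max(0.0, mu - 1.0 / g) for g in gains)
        if used > total_power:
            hi = mu
        else:
            lo = mu
    mu = (lo + hi) / 2
    return [max(0.0, mu - 1.0 / g) for g in gains]


def sum_rate(powers, gains):
    """Total rate in bit/s/Hz over all users."""
    return sum(math.log2(1 + p * g) for p, g in zip(powers, gains))
```

For example, with gains [4.0, 0.25] and a unit budget, all power goes to the stronger user, because the weaker user's 1/g threshold lies above the water level.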

Scientific novelty. The scientific novelty of the proposed solution lies in the joint optimization of the placement of ABS and power allocation under resource constraints, which revealed a dependency between the coverage radius of GBS and the flight altitude of ABS. Specifically, as the coverage radius of GBS increases, the optimal flight altitude of ABS decreases, and vice versa.

Practical significance. The practical significance lies in the possibility of developing a methodology for planning public communication networks using ABS to support GBS under resource constraints. This approach makes it possible to ensure a high total data transmission rate and improve the reliability of network operation.

Relevance. Recognition of geometric primitives is used in image processing to support the development of machine learning algorithms, to narrow the analysis area, and to reduce computational complexity. One problem of primitive recognition is its dependence on external factors such as wide variations in brightness and contrast, and interference caused by uneven lighting, foreign objects, or contamination. A separate task is estimating the geometric position of the primitive in the image, which is defined by offsets, rotation, and scale, or by the parameters of a more complex mathematical transformation model. Many tasks do not require automatic real-time processing, so they can be solved by setting parameters interactively. Interactive processing ensures robustness to spatial-luminance distortions and various types of interference.

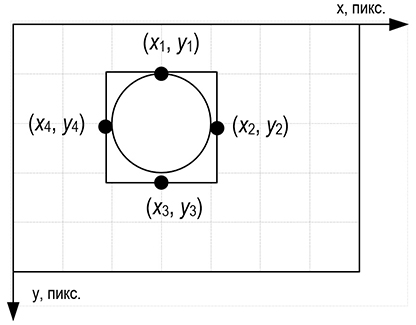

The purpose of the article is to improve the quality of recognition of geometric primitives in images (using a circle as an example) through interactive processing visually controlled by the operator.

The essence of the proposed solution is a two-stage process: interactive preprocessing, followed by estimation of the primitive's geometric parameters with automatic removal of impulse noise. At the first stage, a threshold is selected for detecting the primitive's contour, and the analysis area is limited by selecting a fragment of the image. These parameters are set through a graphical interface in interactive mode (for example, changing the detection threshold almost instantly displays the recognized contours in the image). At the second stage, an area of interest matching the shape of the primitive is selected, which removes impulse noise (contour points that do not belong to the primitive), and the parameters of the primitive are estimated from the points in the area of interest using the least squares method. The developed algorithm is implemented as a program with a graphical interface. Experiments showed satisfactory recognition of the geometric primitive “circle” on various types of images containing a road sign, a polymer gel particle, and a ferrule end face. The scientific novelty of the solution lies in recognizing primitives robustly with respect to spatial-brightness transformations (scale, displacements, brightness unevenness, etc.) and other noise.
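The second stage can be sketched as an algebraic (Kåsa) least-squares circle fit followed by refitting on the points inside an annular area of interest around the current estimate. The Kåsa formulation, the annulus half-width of 10 % of the radius, and the number of refinement rounds are assumptions for illustration. The paper's exact area-of-interest rule and its interactive first stage are not reproduced.

```python
import math


def _det3(m):
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def _solve3(a, b):
    """Solve a 3x3 linear system by Cramer's rule."""
    d = _det3(a)
    solution = []
    for col in range(3):
        m = [row[:] for row in a]
        for r in range(3):
            m[r][col] = b[r]
        solution.append(_det3(m) / d)
    return solution


def fit_circle(points):
    """Kasa fit: least squares on x^2 + y^2 + D x + E y + F = 0 (normal equations)."""
    ata = [[0.0] * 3 for _ in range(3)]
    atb = [0.0] * 3
    for x, y in points:
        row = (x, y, 1.0)
        rhs = -(x * x + y * y)
        for i in range(3):
            atb[i] += row[i] * rhs
            for j in range(3):
                ata[i][j] += row[i] * row[j]
    d, e, f = _solve3(ata, atb)
    cx, cy = -d / 2, -e / 2
    return cx, cy, math.sqrt(cx * cx + cy * cy - f)


def fit_circle_robust(points, band=0.1, rounds=3):
    """Refit on points inside an annulus of half-width band*r around the current estimate."""
    cx, cy, r = fit_circle(points)
    for _ in range(rounds):
        inliers = [(x, y) for x, y in points
                   if abs(math.hypot(x - cx, y - cy) - r) <= band * r]
        if len(inliers) < 3:
            break
        cx, cy, r = fit_circle(inliers)
    return cx, cy, r
```

The annulus plays the role of the shape-matched area of interest: contour points far from the current circle, such as impulse noise, are excluded before the final least-squares estimate.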

The theoretical significance lies in expanding the capabilities of recognition methods (in particular, primitives such as “circles”) through interactive selection of parameters at the preprocessing stage.

The practical significance lies in the simplicity of the image processing algorithms used to solve applied problems that do not require real-time recognition (preparing machine learning data, processing by optical micrometry methods).

Relevance. The rapid development of Internet of Things technology has led to an exponential increase in the number of intelligent devices connected to the Internet, which in turn has generated a large amount of data to be transmitted over communication systems. Radio communication networks lack sufficient capacity to handle this load, and optical transmission systems using atmospheric channels are one realistic option. However, atmospheric optical communication systems are affected by atmospheric factors: absorption, scattering, and diffraction weaken the laser beam and broaden it. Known studies of beam divergence investigate how the maximum divergence depends on indicators such as the laser source power, the geometric length of the channel, and the wavelength of the optical radiation. These studies, however, consider a single channel of a laser communication network and do not address the choice of the laser beam diameter in all channels of a multichannel atmospheric laser network.

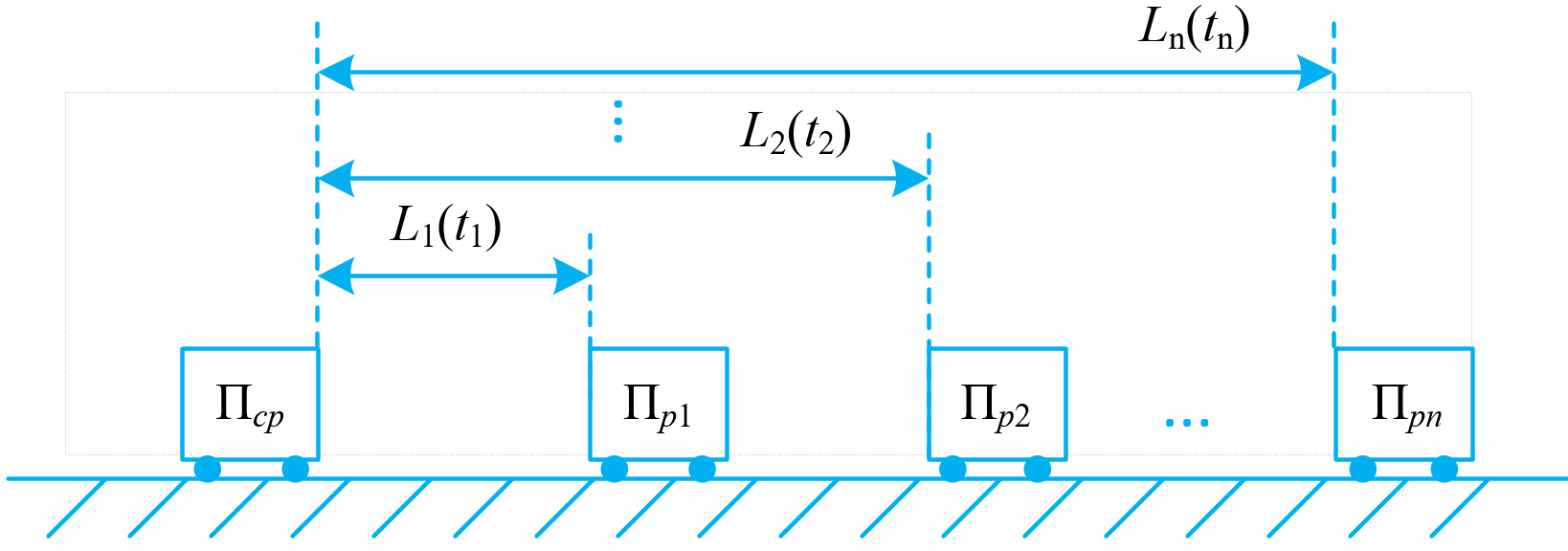

Purpose. The problem of optimally choosing the laser beam diameter in a distributed multichannel atmospheric optical communication system is formulated, taking beam divergence into account.

The essence of the proposed solution: unlike known works that solve the optimization problem taking beam divergence into account, this solution covers both stationary and mobile variants of the network channels of the entire multichannel system. The task was solved by forming a single target functional and then optimizing it to identify the optimal relationship between the beam radius at the input of the channel receiver and the beam radius at the output of the emitter for the atmospheric channel under consideration, taking its possible broadening into account. It is shown that expanding the laser beam diameter according to the identified optimal law makes it possible to maximize the average intensity of the laser beam transmitted through the atmospheric channel to all receivers of the system. Model studies of the proposed method for accounting for beam diameter broadening confirmed that an optimal ratio between the main indicators of a multichannel laser atmospheric network can be obtained.
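A much-simplified illustration of a single target functional over several links is sketched below. It assumes a diffraction-limited Gaussian beam in a lossless medium (no turbulence or absorption), one common transmitter beam radius w0 for all links, and the sum of on-axis receiver intensities as the objective. None of these modeling choices is taken from the paper. In particular, atmospheric broadening and mobile channels are ignored.

```python
import math


def beam_radius(w0, length, wavelength):
    """Radius of a diffraction-limited Gaussian beam after `length` meters (vacuum model)."""
    z_r = math.pi * w0 ** 2 / wavelength          # Rayleigh range
    return w0 * math.sqrt(1.0 + (length / z_r) ** 2)


def on_axis_intensity(power, w0, length, wavelength):
    """Peak intensity 2P / (pi w^2) in the receiver plane."""
    w = beam_radius(w0, length, wavelength)
    return 2.0 * power / (math.pi * w ** 2)


def best_common_w0(lengths, power, wavelength, lo=1e-4, hi=0.2, steps=4000):
    """Single transmitter radius maximizing the summed on-axis intensity over all links.

    A plain log-spaced grid search; the objective is an illustrative stand-in
    for the paper's target functional.
    """
    best_w0, best_value = lo, -1.0
    for i in range(steps + 1):
        w0 = lo * (hi / lo) ** (i / steps)
        value = sum(on_axis_intensity(power, w0, L, wavelength) for L in lengths)
        if value > best_value:
            best_w0, best_value = w0, value
    return best_w0
```

For one link of length L, the optimum is w0 = sqrt(λL/π). The Rayleigh range then equals L, so the receiver-side radius is √2·w0, which is a receiver-to-emitter radius ratio in its simplest vacuum form. For links of different lengths, each intensity term grows below its own optimum and falls above it, so the common optimum lies between the individual optima.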

Scientific novelty. A mathematical model for optimizing the choice of beam diameter in a multichannel laser atmospheric network has been developed.

Theoretical and practical significance. Optimization of the proposed model made it possible to obtain an optimal ratio between the main indicators of a multi-channel laser atmospheric network of a distributed type, which can be used in the construction of such systems.

Relevance. In long-haul decameter-band (LDB) communication systems, the number of bit errors depends simultaneously on two key factors: the signal-to-noise ratio and the degree of phase distortion caused by the Doppler shift that arises from the random motion of ionospheric inhomogeneities. Improving the interference resistance of such systems is complicated by the fact that, even with a high signal level at the demodulator input, phase distortions can disrupt reception, leading to a sharp increase in the number of bit errors and a degradation of the bit error rate (BER). Despite a sufficient number of classical works, the problem of improving the interference resistance of modern domestic LDB, or short-wave (HF), radio communication systems in specified operating scenarios using modern methods and means of digital signal processing (DSP) remains relevant and in demand.
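The interaction of SNR and phase distortion described above can be made concrete with the textbook bit error probability of coherent BPSK with a static carrier-phase error φ: Pb = Q(√(2Eb/N0)·cos φ). BPSK and a static (rather than random, ionosphere-driven) phase error are simplifying assumptions. The paper's modulation and channel model are not represented here.

```python
import math


def bpsk_ber(ebn0_db, phase_error_rad=0.0):
    """Coherent BPSK bit error probability with a static carrier-phase error.

    Pb = Q(sqrt(2 Eb/N0) * cos(phi)) = 0.5 * erfc(sqrt(Eb/N0) * cos(phi)).
    """
    ebn0 = 10 ** (ebn0_db / 10)
    return 0.5 * math.erfc(math.sqrt(ebn0) * math.cos(phase_error_rad))


def doppler_phase_drift(doppler_hz, elapsed_s):
    """Phase (radians) accumulated by an uncorrected Doppler offset over elapsed_s seconds."""
    return 2 * math.pi * doppler_hz * elapsed_s
```

For example, a residual Doppler offset of 1 Hz left uncorrected for 0.25 s rotates the constellation by π/2. At that point this model gives a BER of 0.5 regardless of how high the SNR is.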

The object of the research is modern domestic decameter-band radio communication systems, which often exhibit known shortcomings, including low adaptability to ionospheric changes and susceptibility to interference. For example, the R-016 system has a limited frequency range, which makes it less effective under varying ionospheric conditions that affect signal levels. Prototypes may also have signal processing problems that lead to a bit error rate of up to 10⁻³ even in the absence of noticeable interference.

The subject of the study is the models and methods of operation of LDB communication lines.

The objective of the research is to evaluate the impact of various factors, such as the preamble length and the use of adaptive filters, on the interference resistance of the system. Analysis of the results shows that increasing the preamble length in such systems improves the interference resistance of long-haul communication. The scientific novelty lies in improving existing decameter-band radio propagation models by applying a set of parameters that includes the signal-to-noise ratio specified for a particular communication session on the radio link and, to increase the accuracy of field strength calculations at the receiving point, critical frequencies recalculated from electron density forecasts, as well as the Doppler shift for each ionospheric layer. The practical significance of the results lies in enhancing the interference resistance of existing decameter-band communication systems under ionospheric propagation conditions.
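One mechanism behind the preamble-length effect can be illustrated with carrier-phase estimation. A known preamble of N symbols gives a maximum-likelihood phase estimate whose error variance falls roughly as 1/(2·N·Es/N0). The sketch assumes a BPSK preamble, a constant phase over the preamble, and AWGN only, with no Doppler drift or adaptive filtering. It is not the authors' propagation model.

```python
import cmath
import math
import random


def estimate_phase(received, preamble):
    """ML estimate of a constant carrier phase from a known preamble."""
    acc = sum(r * p.conjugate() for r, p in zip(received, preamble))
    return cmath.phase(acc)


def rms_phase_error(preamble_len, snr_db, trials=500, true_phase=0.3, seed=7):
    """Monte Carlo RMS phase error; snr_db is Es/N0 per unit-energy preamble symbol."""
    rng = random.Random(seed)
    snr = 10 ** (snr_db / 10)
    sigma = math.sqrt(1 / (2 * snr))             # noise std per real dimension
    rotation = cmath.exp(1j * true_phase)
    total = 0.0
    for _ in range(trials):
        preamble = [complex(rng.choice((-1, 1)), 0) for _ in range(preamble_len)]
        rx = [p * rotation + complex(rng.gauss(0, sigma), rng.gauss(0, sigma))
              for p in preamble]
        err = estimate_phase(rx, preamble) - true_phase
        err = (err + math.pi) % (2 * math.pi) - math.pi   # wrap to [-pi, pi)
        total += err * err
    return math.sqrt(total / trials)
```

Going from 4 to 64 preamble symbols at the same SNR reduces the RMS phase error by roughly a factor of four in this model, which in turn lowers the phase-induced bit errors.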

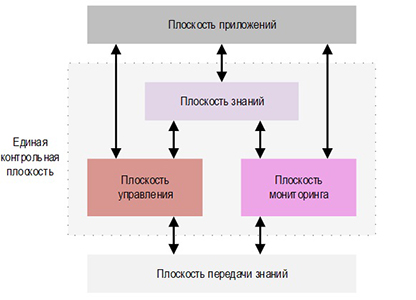

In this paper, the concept and architecture of Knowledge-Defined Networking (KDN) are explored as a new paradigm of network management that integrates artificial intelligence and machine learning to enable intelligent and adaptive network behavior.

The relevance of the research is driven by the limitations of traditional and Software-Defined Networking (SDN) systems in the face of modern challenges such as exponential traffic growth, dynamic conditions, and rising operational costs. KDN introduces a knowledge plane that optimizes resource allocation, automates decision-making, and enhances security in real time. SDN technology is very popular today: its centralized control function provides an overview of all processes occurring in the network. When it appeared, SDN was a genuine breakthrough, and some experts now believe that the next stage of network evolution will be the Knowledge-Defined Network, a network defined by knowledge and operating on the basis of machine learning algorithms. Routing, resource allocation, network function virtualization (NFV), service function chaining (SFC), anomaly detection, and network load analysis can all be taken over by KDN.

The study aims to examine the structural and functional features of KDN and to analyze how its five logical planes (data, control, monitoring, knowledge, and applications) interact to achieve a high degree of automation and adaptability. The research methods include literature analysis, conceptual modeling, and a comparative evaluation of the KDN and SDN architectures.

The results. The study analyzed the architecture of KDN, comprising five logical planes: data, control, monitoring, knowledge, and applications. The findings demonstrate that integrating the knowledge plane significantly enhances automation and adaptability within the network.
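The interaction of the planes can be illustrated with a deliberately small closed loop. In the sketch below, the class names, the exponential-moving-average "knowledge", and the path-selection rule are all hypothetical choices for illustration. A real knowledge plane would host trained ML models, and here the data plane is reduced to a flow-table dictionary.

```python
from dataclasses import dataclass, field


@dataclass
class MonitoringPlane:
    """Collects per-link utilization samples reported by the data plane."""
    pending: list = field(default_factory=list)

    def collect(self, link_loads):
        self.pending.append(dict(link_loads))

    def drain(self):
        batch, self.pending = self.pending, []
        return batch


@dataclass
class KnowledgePlane:
    """Learns a per-link load forecast; here just an exponential moving average."""
    alpha: float = 0.5
    forecast: dict = field(default_factory=dict)

    def learn(self, samples):
        for sample in samples:
            for link, load in sample.items():
                previous = self.forecast.get(link, load)
                self.forecast[link] = self.alpha * load + (1 - self.alpha) * previous


@dataclass
class ControlPlane:
    """Turns knowledge into decisions and installs them in the (simulated) data plane."""
    flow_table: dict = field(default_factory=dict)

    def install(self, flow, paths, forecast):
        # pick the candidate path whose busiest link has the lowest predicted load
        best = min(paths, key=lambda path: max(forecast.get(link, 0.0) for link in path))
        self.flow_table[flow] = best
        return best


def application_request(flow, paths, monitoring, knowledge, control):
    """Application plane: requests a route, triggering monitoring -> knowledge -> control."""
    knowledge.learn(monitoring.drain())
    return control.install(flow, paths, knowledge.forecast)
```

The design point the sketch tries to convey is that the control plane no longer applies fixed rules. It consults what the knowledge plane has learned from monitoring data before acting on the data plane.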

The novelty of this work lies in being one of the first attempts to conduct a systematic analysis of the Knowledge-Defined Networking (KDN) concept in the context of Russian-language scientific literature. The research addresses an existing gap in domestic science, offering a unique perspective on KDN capabilities considering local conditions and applications.

The theoretical significance of the work lies in establishing a foundation for the study and integration of machine learning methods into network management systems.

INFORMATION TECHNOLOGIES AND TELECOMMUNICATION

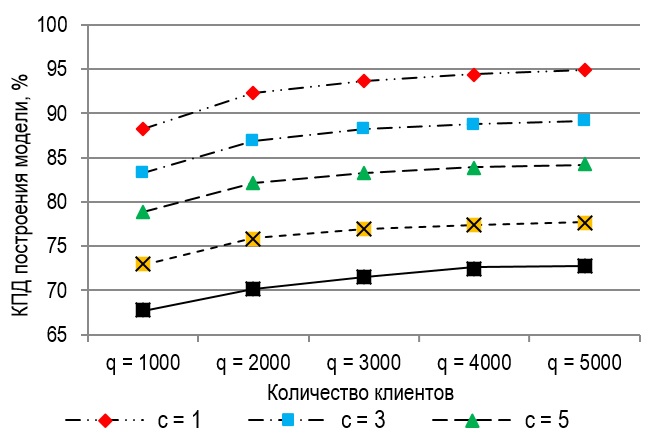

Currently, data mining based on machine learning plays a key role in decision support across various industries. An important practical problem of machine learning is real-time object classification, which can be achieved by parallelizing data processing algorithms for both input data and decision function data. To improve the efficiency of parallelizing machine learning methods, a unified decision function model has been developed. The relevance of this research lies in presenting a unified decision function model within machine learning algorithms, together with functions for its parallelization over both input data and decision function data.

The essence of the presented approach is as follows. The operating features of metric machine learning methods are analyzed, and independent data for processing are represented using different categories of the analyzed property. The developed decision function model describes object features for input data and decision function data using standardized elements, and it includes functions for their parallel processing based on group parallelization of objects. The approach relies on methods for analyzing algorithms and computational complexity, on mathematical statistics, and on the methodology of designing parallel algorithms.
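The two parallelization directions can be sketched with a simplified potential function classifier. Every training object contributes K(x, xᵢ) = 1/(1 + a‖x − xᵢ‖²) to its class score with unit weight; the classical method also learns weights iteratively. Splitting the training set across workers corresponds to parallelizing over decision function data. Distributing whole objects across workers corresponds to group parallelization over input data. The function names and chunking scheme are illustrative assumptions, not the paper's unified model.

```python
from concurrent.futures import ThreadPoolExecutor


def potential(x, y, a=1.0):
    """Potential function K(x, y) = 1 / (1 + a * ||x - y||^2)."""
    d2 = sum((xi - yi) ** 2 for xi, yi in zip(x, y))
    return 1.0 / (1.0 + a * d2)


def partial_scores(x, chunk):
    """Class scores contributed by one chunk of (features, label) training objects."""
    scores = {}
    for features, label in chunk:
        scores[label] = scores.get(label, 0.0) + potential(x, features)
    return scores


def merge(parts):
    totals = {}
    for part in parts:
        for label, s in part.items():
            totals[label] = totals.get(label, 0.0) + s
    return totals


def classify(x, training, workers=4):
    """Parallelize over decision function data: split the training set into chunks."""
    size = -(-len(training) // workers)          # ceiling division
    chunks = [training[i:i + size] for i in range(0, len(training), size)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        totals = merge(pool.map(partial_scores, [x] * len(chunks), chunks))
    return max(totals, key=totals.get)


def classify_group(objects, training, workers=4):
    """Parallelize over input data: each worker classifies whole objects sequentially."""
    def one(x):
        totals = partial_scores(x, training)
        return max(totals, key=totals.get)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(one, objects))
```

Threads keep the sketch self-contained. Because of CPython's global interpreter lock, they show the decomposition rather than a speedup, which would require a process pool or native code.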

Experiments have shown that parallelizing the proposed decision function model for the potential function method increases classification efficiency for a single object when additional computing resources are used, and for a group of objects within the limits of the computer's memory size or planning horizon.

The novelty of the proposed approach is that the model differs from existing ones in the way it formalizes objects and their features using unified elements for training and classification objects. It also has a structure and functions oriented towards parallel processing by decision-function-based pattern recognition methods within the framework of group parallelization of objects.

Theoretical significance: the model is unified and can be used to parallelize other pattern recognition methods that can be described by similar parameters, architecture, and classification features.

The practical significance of the proposed approach is that the model allows decomposing the pattern classification problem into separate subtasks of finding regularities between input data and decision function data.

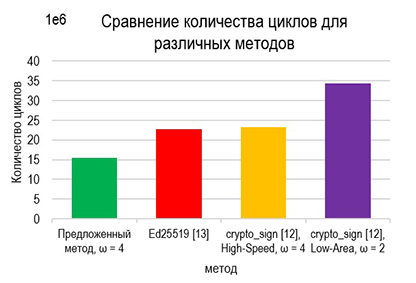

Relevance. Ensuring secure cryptographic operations in resource-constrained environments is challenging because of limited computational power and memory. With the rapid growth of unmanned vehicle systems, the need for efficient and secure cryptographic solutions is increasing. Optimizing cryptographic algorithms for such systems is especially relevant given their limited computational resources and high security demands.

The purpose of this study is to optimize Elliptic Curve Cryptography (ECC) digital signature operations for resource-limited systems, particularly in unmanned vehicle systems. The research aims to enhance computational efficiency and reduce memory usage, making ECC-based security mechanisms more feasible for embedded applications.

The novelty of this study lies in its integration of multiple optimization techniques. It improves scalar point multiplication by leveraging cyclic group properties, additive inverses, and an enhanced windowed multiplication method. Additionally, it introduces a deterministic nonce generation approach inspired by EdDSA to further refine digital signature efficiency. These innovations collectively contribute to a more efficient cryptographic process suitable for constrained environments.
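The three ideas named above can be sketched on a toy curve small enough to verify by hand. The sketch assumes the textbook curve y² = x³ + 2x + 2 over GF(17), whose group has prime order 19, a plain 4-bit fixed-window method (not the paper's enhanced variant), and a nonce derived from SHA-512 in the spirit of RFC 8032. The curve, window width, and function names are illustrative and do not reproduce the paper's implementation. Nothing here is constant-time or suitable for real use.

```python
import hashlib

# Toy curve y^2 = x^3 + 2x + 2 over GF(17); its group has prime order 19 and
# G = (5, 1) generates it. Far too small for real use.
P_MOD, A, N = 17, 2, 19
G = (5, 1)


def add(p, q):
    """Affine point addition; None is the point at infinity."""
    if p is None:
        return q
    if q is None:
        return p
    (x1, y1), (x2, y2) = p, q
    if x1 == x2 and (y1 + y2) % P_MOD == 0:
        return None                                   # P + (-P) = O
    if p == q:
        lam = (3 * x1 * x1 + A) * pow(2 * y1, -1, P_MOD) % P_MOD
    else:
        lam = (y2 - y1) * pow((x2 - x1) % P_MOD, -1, P_MOD) % P_MOD
    x3 = (lam * lam - x1 - x2) % P_MOD
    return (x3, (lam * (x1 - x3) - y1) % P_MOD)


def neg(p):
    """Additive inverse: -(x, y) = (x, -y); costs no field multiplication."""
    return None if p is None else (p[0], -p[1] % P_MOD)


def mul_double_add(k, p):
    """Reference left-to-right double-and-add."""
    result = None
    for bit in bin(k)[2:]:
        result = add(result, result)
        if bit == "1":
            result = add(result, p)
    return result


def mul_windowed(k, p, w=4):
    """Fixed-window method: precompute 0..(2^w - 1)P, then w doublings per digit."""
    table = [None]
    for _ in range(2 ** w - 1):
        table.append(add(table[-1], p))
    digits = []
    while k:
        digits.append(k & (2 ** w - 1))
        k >>= w
    result = None
    for d in reversed(digits):
        for _ in range(w):
            result = add(result, result)
        result = add(result, table[d])
    return result


def mul_opt(k, p):
    """Cyclic-group trick: reduce k mod N; if k > N/2, compute (N - k)(-P) instead."""
    k %= N
    if k > N // 2:
        k, p = N - k, neg(p)
    return mul_windowed(k, p)


def deterministic_nonce(private_key, message):
    """EdDSA-style nonce: hash a secret prefix with the message (no RNG needed)."""
    prefix = hashlib.sha512(private_key.to_bytes(32, "big")).digest()[32:]
    digest = hashlib.sha512(prefix + message).digest()
    return 1 + int.from_bytes(digest, "little") % (N - 1)   # nonce in [1, N-1]
```

The deterministic nonce removes the dependence on a hardware random number generator during signing, which is one reason this style of nonce derivation suits embedded devices.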

The theoretical significance lies in the development of a new mathematical apparatus that makes it possible to optimize electronic signature operations.

The practical significance of this study is its applicability in low-power embedded systems, where computational and memory resources are highly limited. By optimizing ECC operations, this research enhances the security and performance of cryptographic implementations in unmanned vehicle systems and similar embedded applications, ensuring secure communications without exceeding hardware constraints.

The proposed method was implemented on an Arduino Mega 2560 R3 (ATmega2560) and achieves up to a 54.1% reduction in cycle count and a 72.6% decrease in SRAM usage for key generation, along with significant performance improvements in signing and verification. Experimental results confirm its effectiveness in optimizing ECC operations for constrained devices in unmanned vehicle systems.

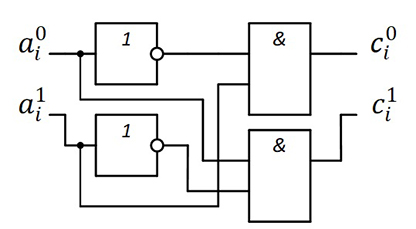

Relevance. One of the unsolved problems of error-correcting coding theory is the construction of low-complexity decoders for long codes. From the point of view of algebraic coding theory, the cornerstone of this is the operation of multiplying two polynomials a and b over the field GF(q^k) modulo a third polynomial g. As q and k increase, methods that compute the field convolution through logarithm and antilogarithm operations become ineffective, because their tables require a large amount of memory. Simplified implementations of the field convolution that use the asymmetry of the companion matrix, and analytical (non-tabular) methods for computing logarithms and antilogarithms using Zhegalkin polynomials, have been developed only for q = 2. Multipliers based on shift registers are significantly slower for large q and k.
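The matrix method and the table (logarithm/antilogarithm) method can be compared on a field small enough to check by hand. The sketch assumes GF(3²) with the primitive polynomial g(x) = x² + x + 2, an illustrative choice not taken from the paper. Elements are coefficient tuples, lowest degree first. The paper's decomposition and Hankel–Toeplitz transform are not implemented.

```python
from itertools import product

Q, K = 3, 2
G_POLY = (2, 1, 1)   # g(x) = 2 + x + x^2, coefficients low-to-high; primitive over GF(3)


def times_x(a):
    """Multiply an element by x and reduce modulo the monic polynomial g."""
    shifted = (0,) + tuple(a)                 # degree may now reach K
    top = shifted[K]
    return tuple((shifted[i] - top * G_POLY[i]) % Q for i in range(K))


def mul_matrix(a, b):
    """Matrix method: c = M(a) b, where column j of M(a) is a * x^j mod g."""
    column = tuple(a)
    result = [0] * K
    for bj in b:
        result = [(r + bj * c) % Q for r, c in zip(result, column)]
        column = times_x(column)
    return tuple(result)


def build_tables():
    """Antilog (EXP) and log tables with q^k - 1 entries each; this is what grows with q and k."""
    exp_table, log_table = [], {}
    e = (1,) + (0,) * (K - 1)
    for i in range(Q ** K - 1):
        exp_table.append(e)
        log_table[e] = i
        e = times_x(e)
    return exp_table, log_table


EXP, LOG = build_tables()


def mul_log(a, b):
    """Table method: a * b = EXP[(LOG[a] + LOG[b]) mod (q^k - 1)]."""
    zero = (0,) * K
    if a == zero or b == zero:
        return zero
    return EXP[(LOG[a] + LOG[b]) % (Q ** K - 1)]


ELEMENTS = list(product(range(Q), repeat=K))
```

Both methods agree on every pair of elements. The table method needs only an index addition but stores q^k − 1 entries per table, while the matrix method needs no tables and instead spends GF(q) multiplications and additions, the operations the Results paragraph identifies as dominating circuit complexity.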

The aim of the study is to find options for reducing the computational complexity of the field convolution operation in multivalued extended Galois fields during its synthesis in the logical basis "AND"‒"OR"‒"NOT".

Methods. An analysis of single-cycle methods for multiplying elements of a multivalued extended Galois field specified in vector or polynomial form for various power bases is carried out. Examples of calculating field convolutions in multivalued Galois fields by various methods are given. The structure of the considered type of fields is studied.

Results. It is shown that the addition and multiplication operations in the field GF(q), synthesized from the elements of the logical basis "AND"‒"OR"‒"NOT", make the main contribution to the complexity of the resulting logical circuit. It is revealed that decomposing the field GF(q^k) into subsets by powers of the primitive element of the field GF(q) reduces the number of multiplication operations. A field convolution method based on the matrix method and the Hankel–Toeplitz transform that takes the field structure into account is proposed. It reduces the total number of logic elements and increases the performance of the designed circuit, namely, it reduces the Quine cost and the circuit rank. A comparative assessment of the developed method is given.

Scientific novelty. For the first time, a method is proposed for the field convolution of two vectors in the field GF(q^k), one of which is given in exponential (power) form.

Theoretical / Practical significance. A new method for calculating the field convolution based on the decomposition of a multivalued extended Galois field is proposed. It is proved that the method reduces the total number of logical operations. The proposed solution can be used in the synthesis of encoding and decoding devices for multivalued (symbolic) codes on binary logic elements.

ISSN 2712-8830 (Online)